Algorithmic errors that could cost us a lot. Embarrassingly, algorithms fail more often than you’d expect.


Yes, algorithms fail. Technology is no longer just for geeks; it is for all of us, and that’s a lot of people. Yet only a few companies, such as Apple, Amazon, Microsoft, and Google, have control over our wallets.

Do they make decisions by themselves, or is there an algorithm involved in the whole process that helps them make important decisions?

Big companies increasingly rely on algorithms. It doesn’t always work out.

Modern history is full of vignettes about companies pushing their technologies forward while ignoring conventional wisdom and social norms.

But what happens in today’s machine-learning, AI-driven world when algorithms fail?

What happens when the machine isn’t offering advice but making a decision, and gets it wrong? What do we do when machines are wrong? Who’s liable? Does liability now shift from the user to the provider of the solution?

Social media platforms rely on algorithms to match their users with content that might interest them.

But what happens when that process goes wrong? When algorithms fail?

Over the past several years, there have been some serious algorithm failures. Algorithms (“algos”) are the formulas or sets of rules used in digital decision-making processes. The question now is: do we put too much trust in digital systems?

There’s one clear standout: the algorithms making the automated decisions that shape our online experiences require more human oversight.

A perfect example is the Facebook News Feed. No one outside Facebook knows why some of your posts show up in some people’s News Feeds and not in others’, but Facebook does.

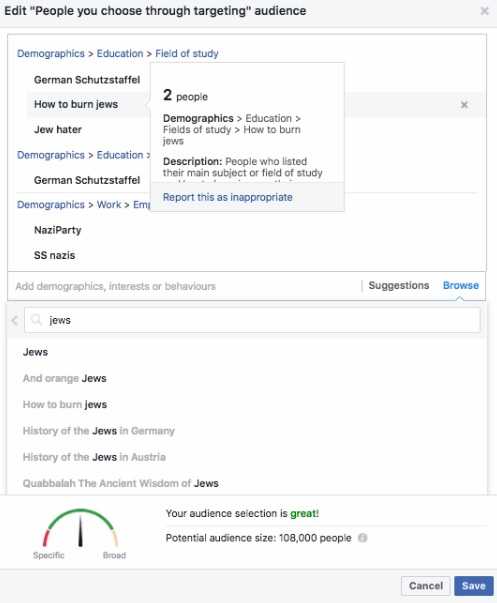

The first case in a string of incidents involved Facebook’s advertising back end, after it was revealed that people who bought ads on the social network were able to target them at self-described anti-Semites.

Disturbingly, the social network’s ad-targeting tool allowed companies to show ads specifically to people whose Facebook profiles used words like “Jew hater” or “How to burn Jews.”

One news website paid $30 for an ad targeting an audience that would respond positively to things like “why Jews ruin the world” and “Hitler did nothing wrong.”

It was approved within 15 minutes.

And Facebook’s racist ad targeting wasn’t the only cause for concern.

Instagram was caught using a post that included a rape threat to promote itself.

After a female Guardian reporter received a threatening email, “I will rape you before I kill you, you filthy whore!” she took a screen grab of the message and posted it to her Instagram account. The image-sharing platform then turned the screenshot into an advertisement, targeted to her friends and family members.

Scary and unethical. But it was just an algorithm failing.

Try telling that to the people who were targeted.

And what about Amazon showing you related books, or Google suggesting related searches? The algorithms behind them are closely guarded secrets that do a lot of work for these companies and can have a big impact on your life.

It’s logical to ask: what are the ethics of liability, and who will be responsible if and when algorithms take over?

Human beings can explain, however imperfectly, why they took the actions they did. Simple rule-based computer programs leave a trail. But cognitive systems, such as machine-learning models, often cannot explain or justify their decisions.

For example, why did an autonomous vehicle behave the way it did when its brakes failed?

Who is responsible when it is hacked?

Will data companies need insurance coverage for their forward-looking forecasts, or will the usual legalese in marketing materials and delivery footnotes suffice?

Will they need to advise customers that they should not rely, for business purposes, on the expensive systems they have just purchased?

There are many open questions, but here we want to point out some algorithm failures from the recent past.

Algorithms aren’t perfect.

Algorithms fail, and some fail spectacularly. On social media, a small glitch can turn into a PR nightmare very quickly. It’s rarely malicious. The New York Times calls these “Frankenstein moments”: situations where the creature someone created turns into a monster.

There are many examples of algorithms failing.

Anyone with a Facebook profile has probably seen its end-of-year, algorithm-generated videos with highlights from the past 12 months.

In 2014, this feature backfired. One father saw a picture of his late daughter. Another man saw snapshots of his home in flames. Others saw their late pets, urns full of a parent’s ashes, and deceased friends. By 2015, Facebook promised to filter out sad memories.

The truth is that most algorithm failures are far from fatal.

But the world of self-driving cars brings a whole new level of danger, and a fatal failure has already happened at least once. A Tesla owner on a Florida highway was using the semi-autonomous mode (Autopilot) when he crashed into a tractor-trailer that cut him off.

Yes, Tesla quickly issued updates. But we have to ask: was it really Autopilot’s fault? The National Highway Traffic Safety Administration says maybe not, since the system requires the driver to stay alert for problems. Tesla now prevents Autopilot from being engaged at all if the driver doesn’t first respond to visual cues.

Another example of algorithms failing comes from Twitter. A couple of years ago, chatbots were supposed to replace customer service reps, and the aim was to make the online world a chattier place to get information.

Microsoft responded in March 2016 by launching an AI chatbot named Tay, which people, specifically 18- to 24-year-olds, could interact with on Twitter. Tay, in turn, would post public tweets for the masses.

But in less than 24 hours, Tay became a full-blown racist. She learned from the foul-mouthed masses, obviously.

Microsoft pulled Tay down almost immediately. She returned as a new AI named Zo in December 2016, this time with “strong checks and balances in place to protect her from exploitation.”

Social media companies are not the only ones afflicted by algorithm failures. Amazon’s recommendation engine, it seems, may have been helping people buy bomb-making ingredients together.

The online retailer’s “frequently bought together” feature might suggest you buy sugar after you’ve ordered powder. But when users bought household items used in homemade bomb building, the site suggested they might also be interested in other bomb ingredients.

What do these mishaps have to do with algorithms?

The common element in all these failures is that the decision-making was done by machines. They highlight the problems that can arise when major tech firms rely so heavily on automated systems.

There are legal issues here, and there are ethical issues. Staff might get basic training to “use the algorithm as input” while the final decision remains a human one. And one day, some human is going to make the wrong “human decision.” When an algorithm says “no” and a person overrides it, we all know the shit is going to hit the fan.

The tide is turning in this area, with increasing demands for algorithmic transparency and greater human involvement. Both are necessary to avoid the problematic outcomes we’ve seen in recent years.

But real change is going to require a philosophical shift.

The bottom line

These companies focus on growth and scale, and to operate at massive size, they have turned to algorithms. But algorithms fail, as we have seen.

Yet algorithms do not exist in isolation. As long as we rely solely on algorithmic oversight of things like ad targeting, ad placement, and suggested purchases, we’ll see more of these disturbing scenarios. While algorithms might be good at managing decision-making on a massive scale, they lack a human understanding of context and nuance, and of ethics too.
